by Erlend Prendergast, Jul 3, 2019

Last year Erlend Prendergast worked as an intern for the Design Thinkers Academy London. After finishing his internship, Erlend went back to the University of Glasgow for his final year. He began working on a project looking into data privacy and digital surveillance. Here Erlend writes about the project and how it works to disrupt Amazon’s virtual assistant – Alexa.

During my time at DK&A and Design Thinkers Academy London I had the opportunity to see how we can use design to address some of society’s biggest problems. I contributed to the launch of The Plastics Cloud at London Design Festival, and I was able to take part in a design sprint with the Ministry of Justice surrounding the topic of child sexual exploitation. These projects saw two very different global issues come under scrutiny. However, at the core of both of these projects was an ambition to create awareness and effect change.

As a result, when I returned to Glasgow to embark on the final year of my product design degree, I was inspired to think big. The focus of my interest became how I could use designed objects as a means to explore, examine and interrogate complex societal issues. I have always been interested in design-for-debate, and this is exactly what I set out to achieve when I developed my graduate project. So, I began to explore ideas surrounding data privacy and digital surveillance.

People frequently use the “Big Brother” analogy when discussing surveillance, but Orwell’s model of authoritarian state surveillance isn’t exactly an appropriate metaphor for the kind of surveillance we see today.

Thanks in part to the Internet of Things, surveillance today is multifaceted, permeating all of our digital interactions. Our personal data constitutes invaluable information capital, and as a result of disclosures such as Snowden’s NSA leaks and the Cambridge Analytica scandal, we are beginning to see how data collection can lead to consequences on a massive scale. What’s more, disclosures such as these have galvanised the public discourse surrounding surveillance, and people have become rightfully paranoid about how their data might be used.

The Alexa project

In order to understand how this paranoia surrounding surveillance impacts our relationship with technology, I carried out user research in the form of interviews and home visits. The most interesting and humorous of the insights centred around virtual assistants – and specifically, Alexa. As there are no laws requiring companies such as Amazon to disclose the algorithms used by their virtual assistants, there is technically no way for an Alexa user to understand how the device operates and how value might be derived from the data that it generates.

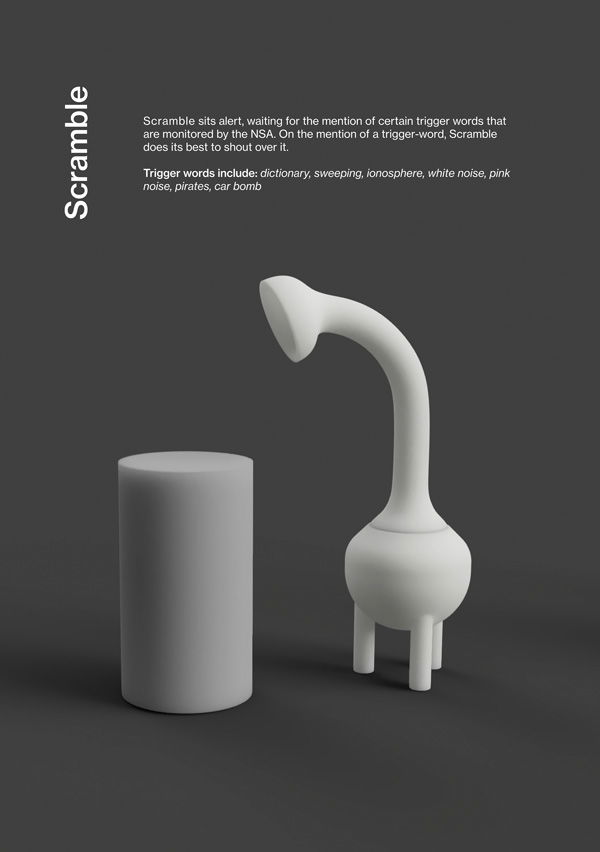

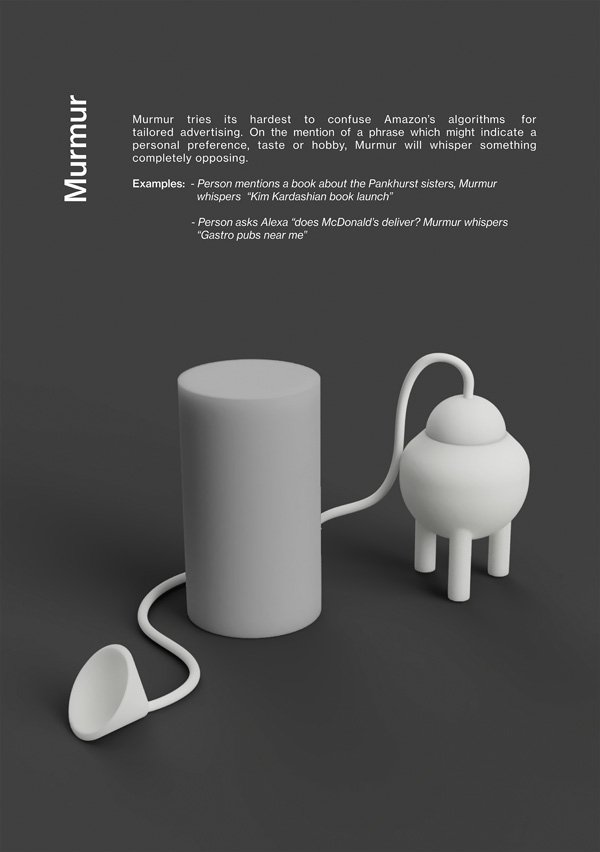

In order to emphasise the absurdity of these knowledge barriers, I created a series of hypothetical scenarios to illustrate how an Alexa user’s data could be leveraged by corporations, governments and other individuals. Rather than creating an alternative to Alexa or redesigning it entirely, I felt that it’d be more impactful to create a device that could be used alongside Alexa. This device would confuse or mislead its algorithms, as a means for the user to ensure their privacy.

The outcome of this was a product named CounterBug. It consisted of three different accessories based on different forms of surveillance paranoia outlined in my research. I worked iteratively to create the forms of these accessories by playing with and altering the form of Amazon’s Echo speaker, jumping back-and-forth between digital and physical prototyping.

Each attachment has a completely different character depending on its function. One censors the user’s language so they don’t get in trouble with the NSA. One disrupts Amazon’s tailored advertising algorithms. And lastly, one chats to Alexa about virtuous topics whilst the user is out of the house.

There is an inherent irony in this project, in that the best way to defend oneself against privacy-infringing virtual assistants is obviously not to use one at all. What CounterBug proposes is an alternative and somewhat satirical approach to digital self-defence – one which allows the user to take back their privacy, without saying goodbye to Alexa.

Since finishing this project, I have gone on to graduate with First-Class Honours in Product Design, and to win the Glasgow School of Art’s Innovation Design Prize. Following our degree show, the project was published in Dezeen magazine and a series of other magazines and blogs across the world – both online and in print. This platform has given me the opportunity to bring a complex idea to life in a way that is accessible and tangible, which is everything that I could have hoped to achieve.